Ahead of our upcoming webinar on the benefits of AI search technology, we sat down with AIMS API’s co-founder Einar Helde to discuss the complexities of music search and the game-changing tools that are transforming workflows for music licensees and licensors alike.

How do you define music search and what makes it so complex?

Music search is about finding the right track. It’s very complex because it can be done in so many different ways, some of them more efficient than others, and there is no single silver bullet for doing search.

Curation is closely connected to music search as well. You can see that on all music discovery platforms. On the streaming services, for example, users are now used to getting suggestions on what to listen to based on their behavior, their tastes, etc. This translates into an expectation of similar recommendations in the sync world. And that’s when you need curation tools that are efficient and are updated as new music comes in.

What are the limitations of traditional search techniques?

Getting started can take a long time if you don’t really know what you’re looking for. Using different search methods can help you get some inspiration in the beginning.

For example, if you have a car commercial, a traditional way of looking for music for it would be to search for keywords like “driving rock”, or to look for a track that mentions “driving” or “on the road” in the lyrics, etc. This approach really limits creativity.

But if you can just describe what you want in a prompt, saying “It’s a drive scene, driving through this kind of landscape with a family, driving this kind of car, here’s the mood we’re going for, the lyrics shouldn’t be about having fun, etc.” you’re not limited to the specific keywords. So you’re not searching for “highway to hell” or “on the road” again, and you can find other things that are much more creative. This approach can uncover unexpected relevant tracks that users didn’t even know existed in the catalog.

Beyond that, using different search methods helps users work around several fundamental issues of traditional search techniques that rely solely on keywords:

1. Human Bias

When people tag music, their personal feelings and interpretations come into play. For instance, while a track might sound “relaxing” to one person, it might feel “melancholic” to another. This means searches can give varied results based on who did the tagging.

On top of that, people have different levels of musical knowledge. Some use technical terms in their search, while others describe how the music makes them feel. The result? It’s harder for users to find the perfect track they’re looking for.

2. Lack of Taxonomy Industry Standard

Without an industry-wide standard, different labels resort to various keywords. For aggregators representing numerous catalogues, this translates to having thousands of keywords users can search by with no consistency across the entire repertoire.

Consider this: a search for “happy” might yield tracks from one catalog, but not from another that used “feel good” or “joyful” instead. This makes your music search limited, as you’re only accessing a fraction of available tracks.

3. Binary Nature of Keywords

Keywords operate on a binary system. If you search for “guitar”, for example, you will find every track that has that tag, regardless of the guitar’s prominence in the composition. Whether it’s a lead guitar or just a part of the mix, the search can’t discern the context or significance of the instrument in the song. To address this, different companies have implemented weighting or specific rules to determine which tracks to display. However, these rules are challenging to maintain, update, and keep logically consistent.

4. Overtagging

Tracks are sometimes tagged with seemingly contradictory terms like “summer” and “Christmas” or “happy” and “sad” to increase their visibility in searches. This is a way to hack the system, ensuring tracks show up more frequently, thus getting the music in front of more clients. However, such practices diminish the quality of the search results. For instance, if you’re searching for “Christmas,” you wouldn’t appreciate results that sound like summery feel-good tunes. Moreover, even if a track can be perceived as both “happy” and “sad”, it’s unlikely it would be a good match for someone’s specific “happy” search.
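To make the taxonomy and binary-keyword issues concrete, here is a minimal sketch in Python. Everything in it is hypothetical and invented for illustration: the `Track` class, the hand-assigned prominence weights, and the `SYNONYMS` table. It is not how any particular catalog or search product works; it only contrasts an exact tag match with a simple weighted match.

```python
from dataclasses import dataclass, field


@dataclass
class Track:
    title: str
    # Maps tag -> prominence in [0, 1]. Values are made up for illustration.
    tags: dict[str, float] = field(default_factory=dict)


# A toy catalog where two labels used different words for the same mood.
CATALOG = [
    Track("Sunrise Drive", {"happy": 0.9, "guitar": 0.2}),
    Track("Porch Song", {"feel good": 0.8, "guitar": 0.95}),
    Track("Night Shift", {"melancholic": 0.7, "synth": 0.9}),
]

# Hypothetical synonym groups that someone would have to maintain by hand.
SYNONYMS = {"happy": {"happy", "feel good", "joyful"}}


def keyword_search(catalog, keyword):
    """Binary match: a track either carries the exact tag or it does not."""
    return [t.title for t in catalog if keyword in t.tags]


def weighted_search(catalog, keyword):
    """Expand the keyword to its synonyms and rank by tag prominence."""
    terms = SYNONYMS.get(keyword, {keyword})
    scored = []
    for t in catalog:
        score = max((t.tags.get(term, 0.0) for term in terms), default=0.0)
        if score > 0:
            scored.append((score, t.title))
    return [title for _, title in sorted(scored, reverse=True)]


print(keyword_search(CATALOG, "happy"))    # misses the "feel good" track
print(weighted_search(CATALOG, "happy"))   # finds both
print(keyword_search(CATALOG, "guitar"))   # catalog order, prominence ignored
print(weighted_search(CATALOG, "guitar"))  # lead guitar ranked first
```

Even in this toy version, the synonym table and the weights are exactly the kind of hand-maintained rules that become hard to keep consistent as a catalog grows.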

How is AI technology improving music search?

It activates more of your catalog because it searches across all the music available every time. It speeds up the process because you are no longer served music that is not suitable for your use case, so you don’t have to sift through a lot of irrelevant results to find what you’re looking for. And you can be much more detailed – you can look at more complex searches without having to turn them into keywords or musical terminology, which creates a unique opportunity for non-music pros who might not know all the genres or instrumentations etc.

It lowers the barrier for search, allowing more people to find suitable music for their projects fast. If you are a music expert, AI provides you with an objective starting point for your initial search, which you can then refine further by applying keywords and various filters on top of it (BPM, release date, favorite labels, licensing-related filters, etc.) to find the track that fits your exact requirements.
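The "rank first, then refine with filters" workflow described here can be sketched roughly as follows. This is an illustration under stated assumptions, not a description of AIMS's engine: the three-dimensional `embedding` vectors are made-up stand-ins for whatever representation a real system derives from audio or text, cosine similarity is just one common way to compare such vectors, and `search`, `min_bpm`, and `max_bpm` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    title: str
    bpm: int
    embedding: tuple[float, ...]  # toy vector; real systems use many more dimensions


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(catalog, query_embedding, min_bpm=None, max_bpm=None, top_k=5):
    """Rank the whole catalog by similarity, then apply metadata filters."""
    ranked = sorted(
        catalog,
        key=lambda t: cosine(t.embedding, query_embedding),
        reverse=True,
    )
    filtered = [
        t for t in ranked
        if (min_bpm is None or t.bpm >= min_bpm)
        and (max_bpm is None or t.bpm <= max_bpm)
    ]
    return [t.title for t in filtered[:top_k]]


CATALOG = [
    Track("Open Road", 120, (0.9, 0.1, 0.0)),
    Track("Slow Coast", 80, (0.8, 0.2, 0.1)),
    Track("City Rush", 140, (0.1, 0.9, 0.3)),
]

query = (1.0, 0.0, 0.0)  # stand-in for an encoded prompt or reference track

print(search(CATALOG, query))               # similarity ranking only
print(search(CATALOG, query, min_bpm=100))  # same ranking, refined by BPM
```

The point of the design is that every track gets a score on every search, so nothing is excluded because of a missing tag, while filters such as BPM remain available as a second step.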

Who benefits from this technology?

Both sides of the music value chain benefit from this.

We can start all the way from production, through tagging the metadata with creative tags. AI can be used to move things along faster in the production process. We are taking away a lot of manual work for music companies, such as finding related albums or related artists, and tagging. All of these things are time-consuming for any music company and, if done by humans, they will never be as unbiased as they would be with AI technology. Beyond that, powering your catalog with an AI search engine significantly increases the searchability and discoverability of your music for the end user.

And then, on the other side, you have people who search for music. They can be internal or external, like music supervisors and editors. In their workflow, things move really fast. Every day they need to find music, and with the right tools, you can cut the search time by up to 90%. They still have to listen to a few tracks to find their favorite, but now it’s the fun part of actually listening to good music and choosing the most fitting track, instead of coming up with the search, thinking about it, refining it, and then listening to a ton of irrelevant suggestions before you get to some good ones.

Curation plays a crucial role in the workflow of these users, and without AI, it cannot be executed as quickly or effectively. AI allows you to personalize curation for each client in a much better way than before because, again, you don’t have to listen to music that doesn’t fit your requirements. You just have to select the music that you want your client to hear, and that should increase conversion and usage.

This technology is great for the pros, but it’s also great for the novices, the non-music pros. If you look at the UGC markets, video creators around the world are used to searching in certain ways, probably from whatever streaming service they’re using. That’s how they like to search. And with AIMS, you have all those kinds of search methods available in a music catalog as well. So the catalogs will be more usable for non-music experts, who will enjoy using them more because the experience is similar to what they use in their private lives.

Taking into consideration current music industry trends, why do these tools matter today and what impact are they having?

The current trend in the industry demands the production of content at an unprecedented pace, in various formats, and across multiple platforms. This has been a long-standing trend, but we are now witnessing an even more rapid acceleration. The growing volume of video content produced for TV, film, social media, and other outlets is placing extraordinary time constraints on those responsible for sourcing music. The need to find suitable tracks quickly, and on a larger scale, is more pressing than ever before.

Simultaneously, there is an exponential increase in the creation of new music. Thus, we are experiencing a surge in both the demand for music and its supply, coupled with the expectation for faster content delivery. This scenario results in significantly reduced time to discover more usable tracks from an ever-expanding pool of available music. Navigating this reality without the aid of AI-assistive technology in the workflow has become virtually impossible.

What are the challenges related to music search yet to be solved?

Even though our technology is quite advanced, we are not mind readers (yet). Therefore, we cannot ascertain precisely what you want without your input. However, we believe that combining different data sources with personalization techniques could bring us closer to that goal. This approach is evident in platforms like Spotify and Netflix, which attempt to predict your preferences for watching and listening, presenting these choices directly to you. As people become accustomed to such personalized services, similar expectations will likely extend to professional music search tools in the future.

Another challenge, perhaps more achievable in the near future, is the development of a truly multimodal music search engine. Imagine a search bar that is input-agnostic. This means you could search using various inputs such as text (ranging from simple keywords to complex textual analysis, including lyrics), audio, images, or videos, and still receive relevant music suggestions. While some components of this technology have been built and tested, other aspects still require refinement to be practical and valuable. The key to simplifying music search and making it accessible to everyone lies in integrating these elements into a single, intuitive package that genuinely works.

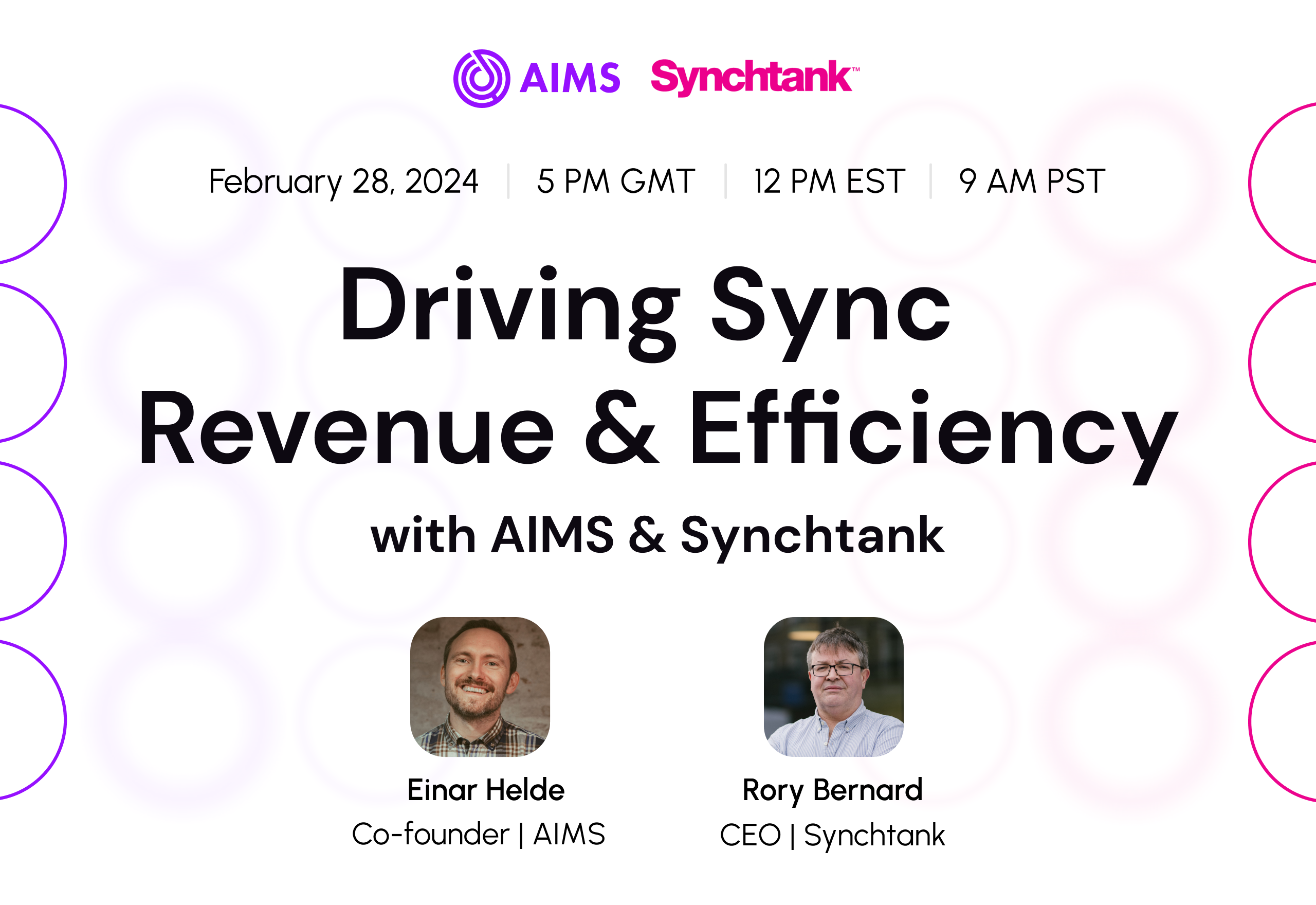

Sign up for our webinar on AI search and more!

Join Synchtank and our partners at AIMS API for a webinar showcasing how our combined solutions are enhancing sync operations and driving business efficiency, revenue, and compliance!

Register here.